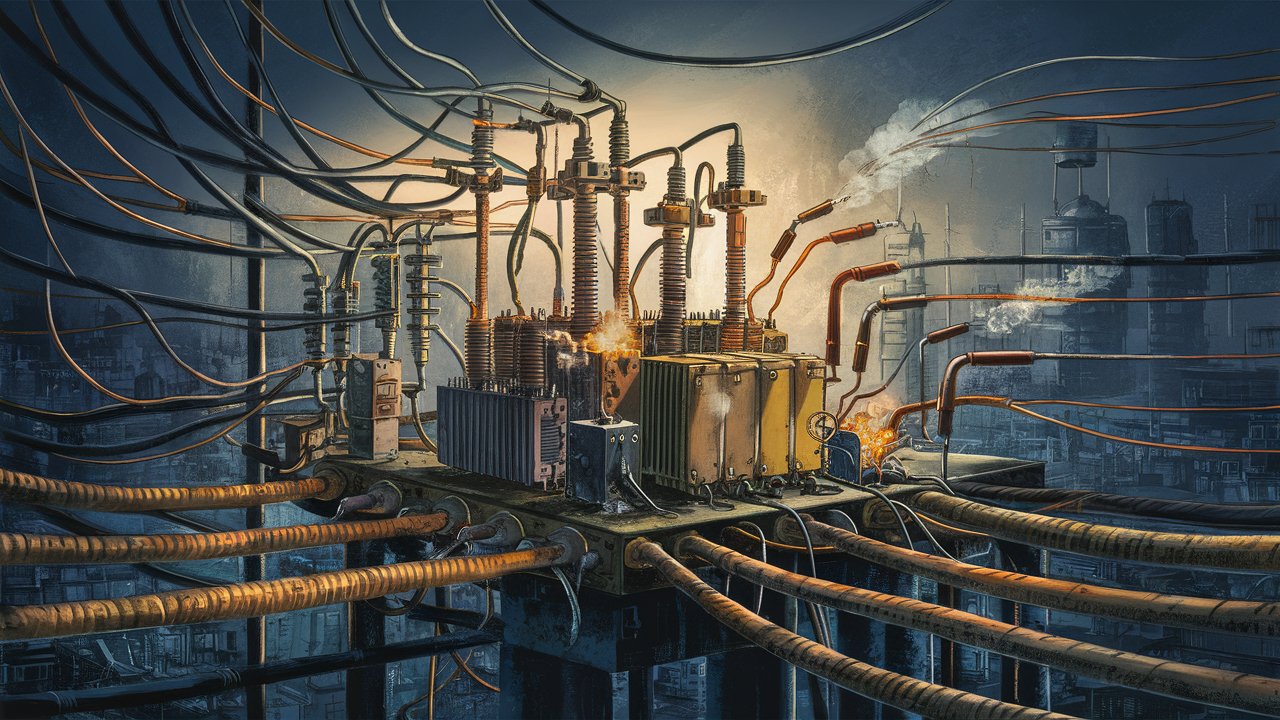

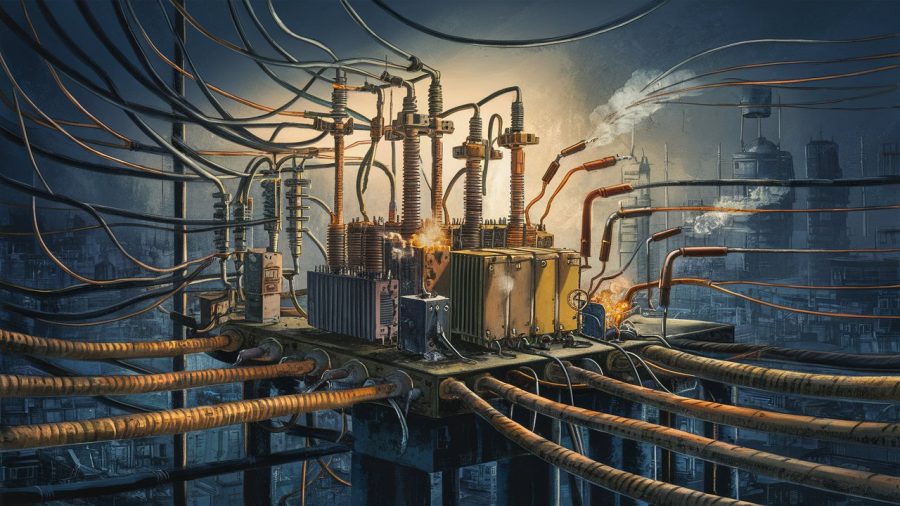

The growth of generative AI requires large quantities of water and power, and the US grid is struggling to cope.

As AI has taken off in the past few years, new data centers are popping up across the country to support its rapid acceleration. AI systems depend on such data centers to provide the vast computing resources required to train and deploy complex machine learning models and algorithms.

However, data centers also require a huge amount of power to run and maintain, as well as water to cool the servers inside. Concerns are rising about whether the US power grid can supply enough electricity for the growing number of data centers needed. While AI has been helping to improve sustainability in some fields, if it’s not a sustainable technology in itself, it won’t be doing any good for the planet.

What can be done to save energy in the AI space?

Those working in AI production at both the hardware and software level are trying to mitigate these drains on energy and resources, as well as investing in sustainable power sources.

“If we don’t start thinking about this power problem differently now, we’re never going to see this dream we have,” Dipti Vachani, head of automotive at Arm, told CNBC. The chip company’s low-power processors are being used more and more by big companies like Google, Microsoft, Oracle and Amazon because they can help to reduce power use by up to 15% in data centers.

Work is also being done to reduce how much energy AI models need in the first place. For example, Nvidia’s latest AI chip, Grace Blackwell, incorporates Arm-based CPUs that can supposedly run generative AI models on 25 times less power than the previous generation.

“Saving every last bit of power is going to be a fundamentally different design than when you’re trying to maximize the performance,” Vachani said.

However, concerns remain that these measures won’t be enough. After all, one ChatGPT query uses nearly 10 times as much energy as a typical Google search, while generating an AI image can use as much power as fully charging a smartphone.

The effects are being seen most clearly at the larger companies, with Google’s latest environmental report showing greenhouse gas emissions rising nearly 50% between 2019 and 2023, partly because of data center energy consumption. That’s despite claims from Google that its data centers are 1.8 times as energy efficient as a typical data center. Similarly, Microsoft’s emissions rose nearly 30% from 2020 to 2024, also due in part to data centers.

Featured image: Unsplash
